Before the read

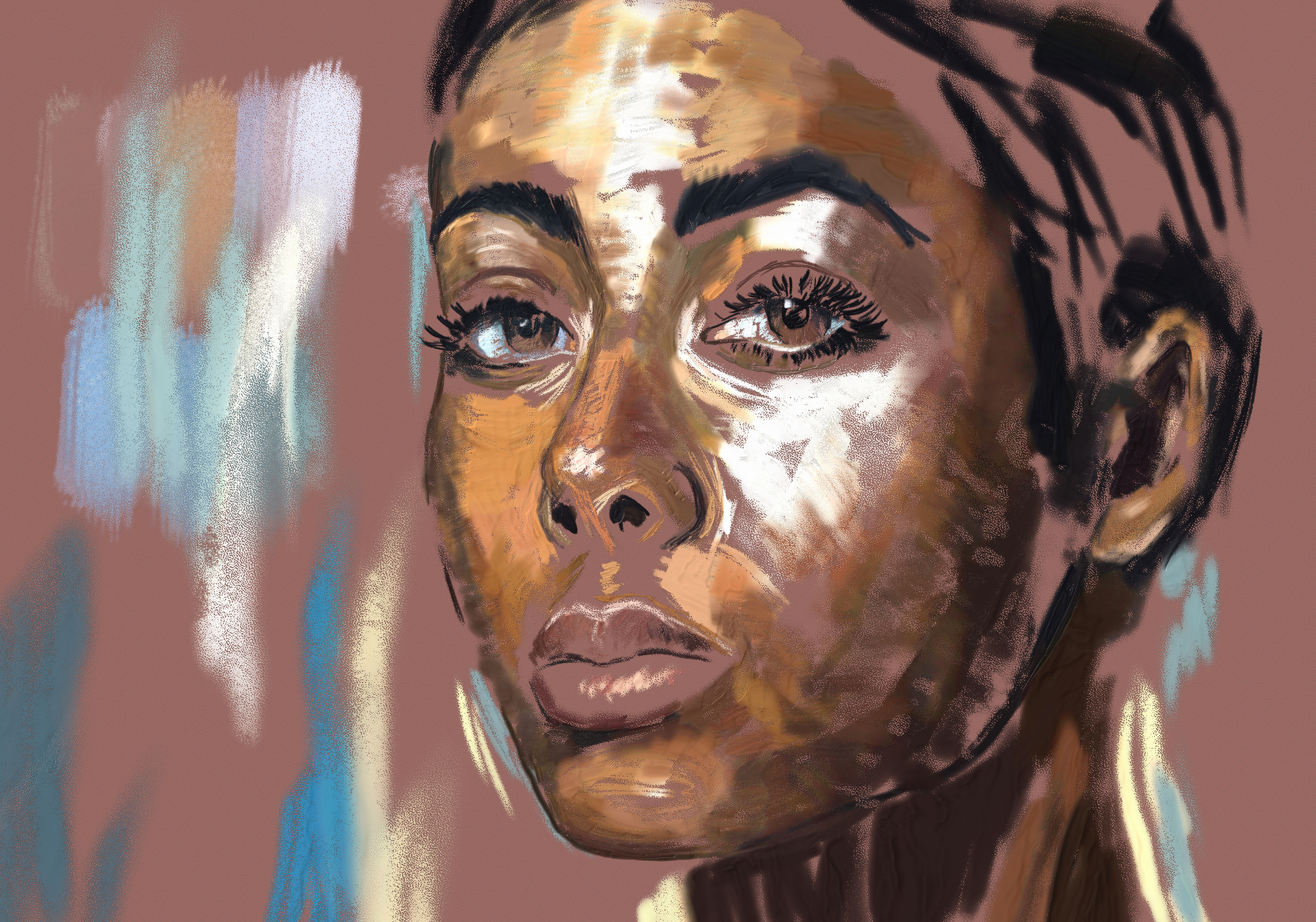

AI tools are creating art at scale, but many artists say it’s costing them more than just exposure.

AI scraping pulls images from the web to train AI models—often without asking or crediting the original creators.

From class action lawsuits to new tech defenses, artists are stepping up to protect their creative rights.

As AI image generators gain ground, artists are calling out the theft of their work and fighting to protect their creative rights.

Artificial intelligence (AI) is more relevant today than ever. The rise of AI has brought both laudable innovations and ethical conundrums, marking a huge leap in technological advancement. Still, certain groups are bearing the brunt of AI's continued growth. Artists in particular face the constant risk, and often the reality, of having their works scraped without consent to train AI models that then generate similar images presented as original, usually without any attribution.

AI Tools Accused of Infringing on Artists' Rights

While AI is praised by some as a driver of creativity and innovation, for others—especially artists—it’s having the opposite effect. Many AI tools have been accused of stifling creativity through the unauthorized use of artists’ work, including:

- Midjourney: Midjourney is an AI image generator that creates images based on textual prompts. Various artists have spoken up about their work being used to train Midjourney’s AI models without their consent, leading to unauthorized reproductions of their art without credit.

- DreamUp by DeviantArt: DreamUp is an AI image generation tool developed by DeviantArt and built on Stable Diffusion. Like Midjourney, it generates images from textual prompts and has been accused of using artists' works to train its AI models without consent.

- Stable Diffusion by Stability AI: Stable Diffusion is another AI tool that generates images from textual prompts. It has also been accused of being trained on artists' work scraped from the web without their consent.

- DALL·E by OpenAI: DALL·E also creates images from textual prompts. It was trained on large datasets that include images collected from the internet, and critics argue this was done without regard for copyright or creator consent.

Legal and Ethical Implications

The AI tools mentioned above, and others not listed here, share two common features: they rely on data compiled from the internet, and they lack transparency and consent in how that data is gathered and used for training. This has far-reaching legal and ethical implications for all stakeholders, particularly for intellectual property rights, the balance between freedom of expression and creator protection, and economic impact.

- Intellectual Property Rights

Creators are turning to the law to push back against the scraping of their works. Through legal action, they hope to stop AI companies from training models on their work without permission, and thereby prevent those models from producing images in their style or substantially similar to their works. These creators argue that such use infringes their right to control the creation of derivative works, which are new works based on preexisting ones that have been adapted, transformed, or recast.

AI developers and companies, on the other hand, claim that their use of images and other copyrighted works scraped from the internet is transformative and therefore qualifies as fair use. A use is considered transformative when it employs the copyrighted work in a different manner or for a different purpose than the original.

- Freedom of Expression vs. Creator Protection

AI proponents argue that restricting AI's ability to scrape information limits innovation and freedom of expression. Artists counter that this scraping infringes their right to control and profit from their creations.

- Economic Impact

AI-generated art can devalue original artworks, which can significantly reduce artists' income. And because AI generators can produce works that closely resemble existing ones, unauthorized copies and imitations of artists' work are likely to become more common.

How Artists Are Fighting Back

Artists are using a range of strategies to challenge how AI companies use their work and to protect their creative rights. These include:

- Legal Action

Artists have taken legal action against several AI companies for scraping their art without consent. In a class action lawsuit currently underway against Midjourney, Stability AI, and DeviantArt, the plaintiff artists allege that these companies have “infringed the rights of millions of artists by training their AI tools on five billion images scraped from the web without the consent of the original artists.”

- Tech Tools

Tools like Glaze and Nightshade are helping creators protect their works. Both were developed by researchers at the University of Chicago, and each takes a different approach to defending artwork against AI scraping. Glaze applies subtle alterations to an image before it is posted online, making it difficult for AI models to replicate the artist's style. Nightshade instead turns images into “poison” samples: AI models that train on them without consent learn unpredictable behavior, which in turn degrades their output. According to the Nightshade team, Glaze is primarily a defensive measure, while Nightshade is primarily an offensive one. Both tools ultimately help creators keep their original works from being used without consent.

Platforms like Cara give artists a place to showcase their work without fear of AI scraping. With built-in protections against scraping and a filter that keeps AI-generated images from being uploaded, Cara offers artists a safe space to share their creativity.

Conclusion

As technology keeps evolving, it is important to balance innovation with the protection of artists' rights. That calls for an industry-wide conversation on ethical guidelines for AI development and use, so that technological progress does not come at the expense of artists' livelihoods.

The Wrap

- Many AI image generators are trained using publicly available art without artist consent, sparking controversy over AI art theft.

- Tools like Midjourney, DALL·E, and Stable Diffusion face backlash for suspected unauthorized scraping of artists’ works.

- Legal battles—including a class action lawsuit—aim to define the boundaries of copyright and fair use in the age of AI.

- Some artists are using tools like Glaze and Nightshade to make their art harder for AI models to mimic or learn from.

- Platforms like Cara provide safe digital spaces for artists to showcase work free from AI scraping concerns.

- The debate over AI vs artists reveals deeper ethical issues about innovation, ownership, and creative control.

- Moving forward, balancing technological progress with creator protection is essential for a sustainable digital art ecosystem.